Biography

Junbo Yin is currently a postdoctoral researcher in the Structural and Functional Bioinformatics Group, led by Prof. Xin Gao, at King Abdullah University of Science and Technology (KAUST). He received his Ph.D. in Computer Science and Technology from Beijing Institute of Technology (BIT) in 2024 under the supervision of Prof. Jianbing Shen. From 2022 to 2023, he was a visiting Ph.D. student at the École Polytechnique Fédérale de Lausanne (EPFL), supervised by Prof. Pascal Frossard. His research interests include 3D object detection, self-supervised learning, and domain adaptation. He has published 18 conference and journal papers in venues such as CVPR, ICCV, ECCV, and TPAMI, with over 1300 citations on Google Scholar. He has received several prestigious scholarships and honorary titles, including the Excellent Graduate Student Award, the Special Graduate Scholarship, and the Huawei Scholarship. He has also been awarded the Zhejiang Lab International Talent Fund for Young Professionals.

Interests

- 3D Object Detection & Tracking

- Multimodal Learning

- Autonomous Driving

- AI for Science

Education

Ph.D. in Computer Vision, 2024

Beijing Institute of Technology

MEng in Pattern Recognition and Intelligent Systems, 2018

Beijing Institute of Technology

BSc in Automation, 2016

North China Electric Power University

News

- 2024.07: 🎉🎉 one paper is accepted to ECCV'24.

- 2024.06: 🎉🎉 one paper is accepted as a Highlight Paper to CVPR'24.

- 2024.02: 🎉🎉 one paper is accepted to AAAI'24.

- 2023.10: 🎉🎉 one paper is accepted to ICCV'23.

- 2023.02: 🎉🎉 two papers are accepted to AAAI'23.

- 2022.10: 🎉🎉 we won 2nd place in the ECCV'22 LiDAR Self-Supervised Learning Challenge.

- 2022.10: 🎉🎉 three papers are accepted to ECCV'22.

- 2021.12: 🎉🎉 one paper is accepted to TPAMI.

- 2021.10: 🎉🎉 we won 3rd place at the ICCV'21 SSLAD Workshop.

- 2021.05: 🎉🎉 we won 1st place in the ICRA'21 AI Driving Olympics.

- 2020.06: 🎉🎉 three papers are accepted to CVPR'20.

Recent Publications

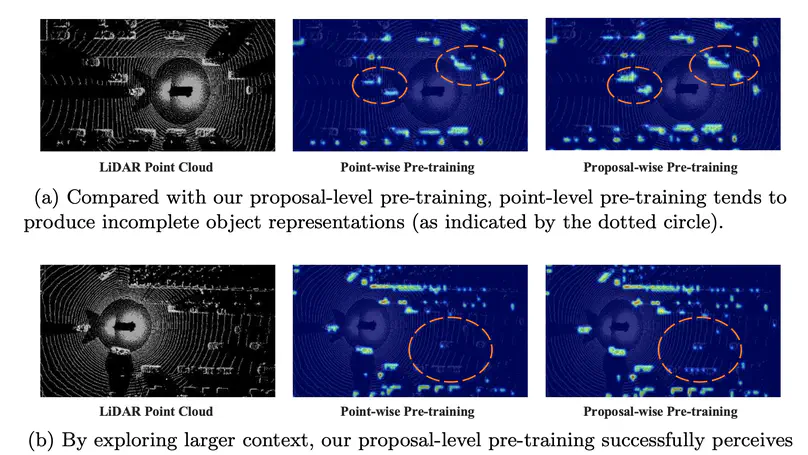

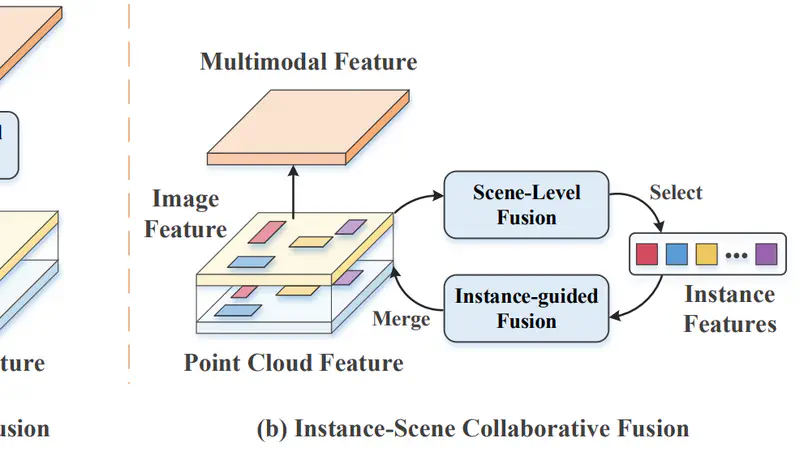

We present IS-Fusion, a novel multimodal 3D object detection algorithm. It enhances the multimodal BEV representation used in previous work by incorporating instance-level information, which significantly improves detection performance. At the time of publication, IS-Fusion achieved the best performance among published methods.

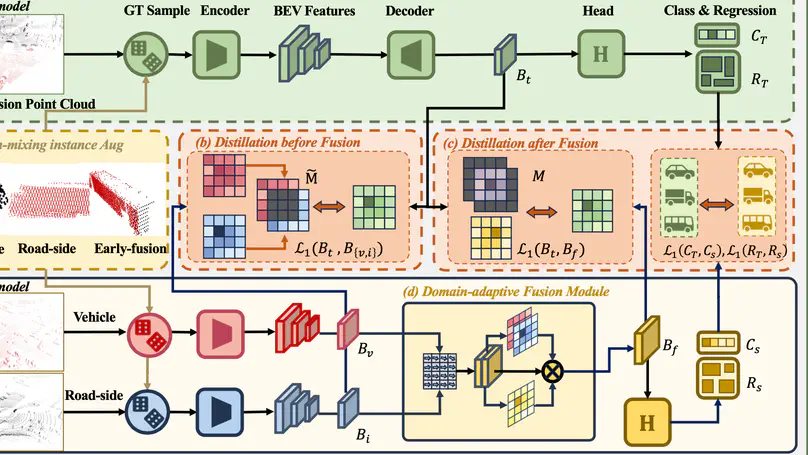

We propose DI-V2X, a new collaborative 3D object detection algorithm that aims to minimize the domain discrepancy among input data from different sources, such as vehicles and infrastructure. Our approach features three key modules: the Domain-Mixing Instance Augmentation (DMA) module, the Progressive Domain-Invariant Distillation (PDD) module, and the Domain-Adaptive Fusion (DAF) module. DI-V2X achieves state-of-the-art performance in V2X perception.

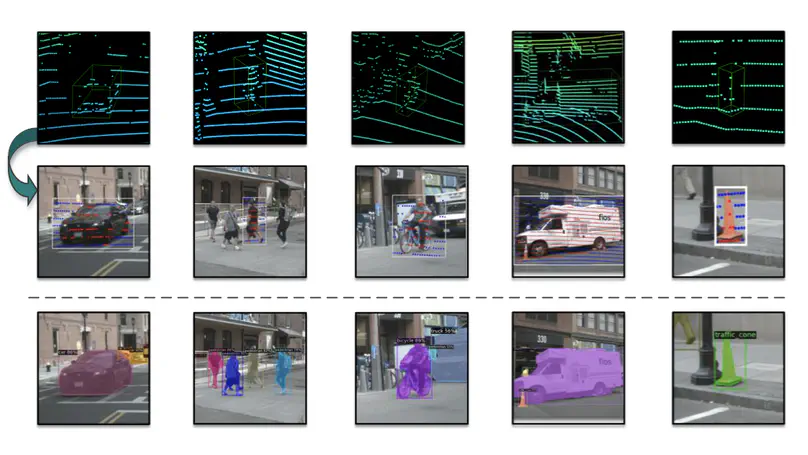

We present LWSIS, a novel learning paradigm that leverages off-the-shelf 3D point clouds to guide the training of 2D instance segmentation models, reducing the need for costly mask-level annotations. We also introduce nuInsSeg, a new dataset built on nuScenes that extends its existing 3D LiDAR annotations with 2D image segmentation annotations.

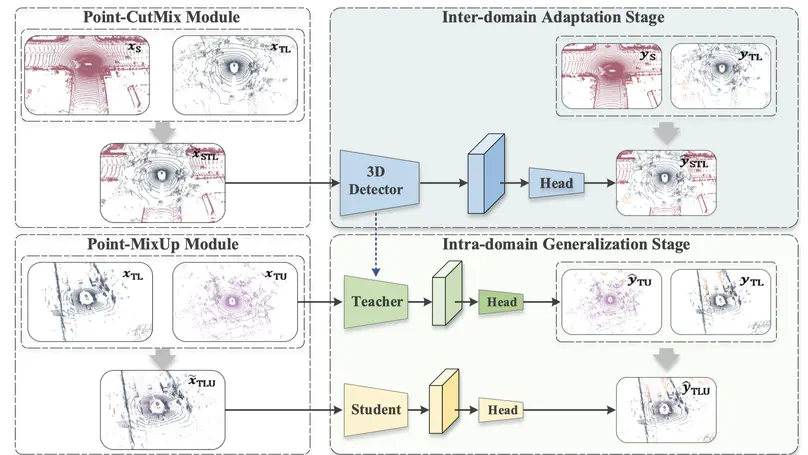

We present SSDA3D, the first effort toward semi-supervised domain adaptation for 3D object detection. SSDA3D is a novel framework that jointly addresses inter-domain adaptation and intra-domain generalization.

Activities

Reviewer:

- IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)

- IEEE Transactions on Image Processing (TIP)

- IEEE Transactions on Neural Networks and Learning Systems (TNNLS)

- IEEE Robotics and Automation Letters (RAL)

- Pattern Recognition (PR)

- Neurocomputing (NEUCOM)